Introduction: Can the Smartwatch Read Your Peace of Mind?

Every second, your smartwatch reads your pulse—but can it truly read your peace of mind? The modern wearable device has transcended its role as a simple fitness tracker to become a critical component of healthcare, leveraging sophisticated AI to detect life-threatening anomalies. From monitoring chronic conditions like hypertension to alerting caregivers to critical changes in the elderly, the devices on our wrists are tasked with delivering high-stakes diagnostic information on the most restrictive of interfaces.

This proliferation of life-critical capabilities imposes a profound ethical challenge on design and engineering: how do we ensure the immediate legibility of data under extreme circumstances without inducing unnecessary patient anxiety? The sections that follow argue that the next generation of wearable design must treat empathy as an ethical requirement, moving beyond optimization metrics to focus on instantaneous user comprehension and the minimization of psychological harm.

I. When Accuracy Fails, Anxiety Rises: The Quantifiable Cost of False Positives

The foundational promise of wearable AI is trust. Yet, when the sophisticated algorithms intended to preserve life malfunction, the consequence is not just a missed diagnosis, but a palpable, quantifiable toll on the user's mental health.

From Fear to Trust: The Dose-Dependent Harm of False Alerts

Imagine recovering from a stroke, relying on your smartwatch for reassurance—and instead, its haptic prompts and screen alerts tell you something’s wrong, again and again. This scenario reveals the devastating consequence of false positives. A study analyzing data from the Pulsewatch trial—focused on older stroke survivors—identified a statistically significant decline in self-reported physical health ($\beta = -7.53, P < 0.02$) associated with receiving false Atrial Fibrillation (AF) alerts.

The impact is not merely anecdotal; it is dose-dependent. Participants who received more than two false alerts reported a more severe decline in perceived physical health ($P = 0.001$) and significantly reduced confidence in chronic symptom management ($P = 0.002$) compared to those who received two or fewer. The implication is stark: system inaccuracy is a direct determinant of patient self-efficacy and well-being.

This is why accuracy is not just a metric; it is an ethical safeguard.

For mass-market consumer devices connected to emergency response systems, the fundamental design principle must be to prioritize specificity over raw sensitivity, minimizing the societal cost of false activations. To achieve this, researchers are turning to specialized deep learning (DL) algorithms, such as the Ensemble LSTM-CNN model, which reports high accuracy (97.23%) and an anomaly detection rate of 95%. For detecting subtle changes across correlated physiological signals (such as heart rate, step count, and sleep time), anomaly detection models like HADA (Health Anomaly Detection Algorithm) report 98.5% accuracy. HADA, however, leans the other way: it tends to generate extra alerts so that no critical event is missed. That bias suits predictive care built on continuous monitoring, but it is exactly the trade-off that consumer devices must weigh against the harm of false alerts described above.
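To make the specificity argument concrete, the sketch below computes how many false alerts two detectors would raise over the same monitoring period. It is a minimal illustration, not a model of any system named above: the window count, the 1% event prevalence, and both sensitivity/specificity pairs are hypothetical values chosen only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Alert outcomes over a monitoring period (all inputs here are hypothetical)."""
    true_pos: int   # alert fired, event was real
    false_pos: int  # alert fired, no event (a false alert)
    true_neg: int   # no alert, no event
    false_neg: int  # no alert, event was missed

    def precision(self) -> float:
        """Share of fired alerts that were real (positive predictive value)."""
        return self.true_pos / (self.true_pos + self.false_pos)


def expected_counts(n_windows: int, prevalence: float,
                    sensitivity: float, specificity: float) -> ConfusionCounts:
    """Expected outcome counts for a detector with the given operating point."""
    positives = round(n_windows * prevalence)
    negatives = n_windows - positives
    tp = round(positives * sensitivity)
    tn = round(negatives * specificity)
    return ConfusionCounts(tp, negatives - tn, tn, positives - tp)


# Two hypothetical detectors judged on 10,000 windows, 1% of which contain an event.
sensitive = expected_counts(10_000, 0.01, sensitivity=0.99, specificity=0.95)
specific = expected_counts(10_000, 0.01, sensitivity=0.95, specificity=0.99)

for name, c in [("sensitivity-first", sensitive), ("specificity-first", specific)]:
    print(f"{name}: {c.false_pos} false alerts, {c.false_neg} missed events, "
          f"precision {c.precision():.2f}")
```

Under these assumed numbers, the sensitivity-first detector raises 495 false alerts to the specificity-first detector's 99, while missing 1 event instead of 5. Because real events are rare, a few points of specificity move the false-alert count far more than a few points of sensitivity move the miss count.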

II. When Anxiety Rises, Legibility Becomes Essential

If the algorithm must, at times, fail and thereby raise patient anxiety, the interface must be tailored to ease cognitive load and ensure immediate comprehension. This challenge is magnified in dynamic scenarios where the user's attention is fragmented and the screen is only glimpsed mid-motion.

The Dynamics of Data: Charts Conquer Cognitive Load

A user engaged in high-intensity exercise (such as running) experiences a significant decline in cognitive performance, making the traditional display of static text fundamentally ineffective. Research confirms that charts and graphs consistently and significantly outperform plain text in enhancing both cognitive performance and user preference across all motion scenarios. The visual clarity of a chart, such as a bar graph showing heart rate zones, is essential because it allows the user to grasp complex data in a quick, understandable format while their attention is limited.

The design trade-offs here are palpable:

| Design Element | Impact on Cognitive Efficiency | User Preference | Design Conflict/Resolution |
|---|---|---|---|
| Presentation Form | Charts/Graphs are significantly more efficient than Text. | Charts/Graphs are preferred. | Resolution: Prioritize abstract visualization (e.g., bar charts) to ensure readability during high-intensity movement. |
| Animation Style | Non-animated forms yield higher performance scores. | Animated effects are preferred by users subjectively. | Conflict: Efficiency clashes with experience. Animation should be used sparingly, primarily to improve mood in low-satisfaction scenarios, rather than for critical data presentation. |
| Color Mode | Dark Mode generally results in higher performance. | Dark Mode significantly reduces user fatigue and enhances satisfaction. | Resolution: Dark Mode is recommended for long-term use, mitigating the "shaking effect" caused by extensive white backgrounds in light mode. |
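The resolutions in the table can be read as a small display policy. The sketch below encodes them that way; `DisplayConfig`, `choose_display`, and both parameters are hypothetical names invented for illustration and do not correspond to any real smartwatch SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DisplayConfig:
    presentation: str  # "chart" or "text"
    animated: bool
    dark_mode: bool


def choose_display(is_critical: bool, low_satisfaction: bool = False) -> DisplayConfig:
    """Map the table's three resolutions onto one configuration (illustrative only)."""
    # Charts outperform text in every motion scenario, so motion is not an input.
    presentation = "chart"
    # Animation is a mood aid for low-satisfaction moments, never for critical data.
    animated = low_satisfaction and not is_critical
    # Dark mode is the recommended default for long-term use.
    return DisplayConfig(presentation, animated, dark_mode=True)


print(choose_display(is_critical=True, low_satisfaction=True))
print(choose_display(is_critical=False, low_satisfaction=True))
```

The only branch in the policy is animation, which matches the table: it is the one row where measured efficiency and stated preference disagree.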

This pursuit of legibility extends to critical alerts. When designing medical alerts for vulnerable populations, such as older adults, the interface must prioritize psychological comfort. For AF monitoring, the choice of a blue watch face color instead of red for abnormality was a deliberate design decision, informed by patient feedback, to avoid inducing worry.

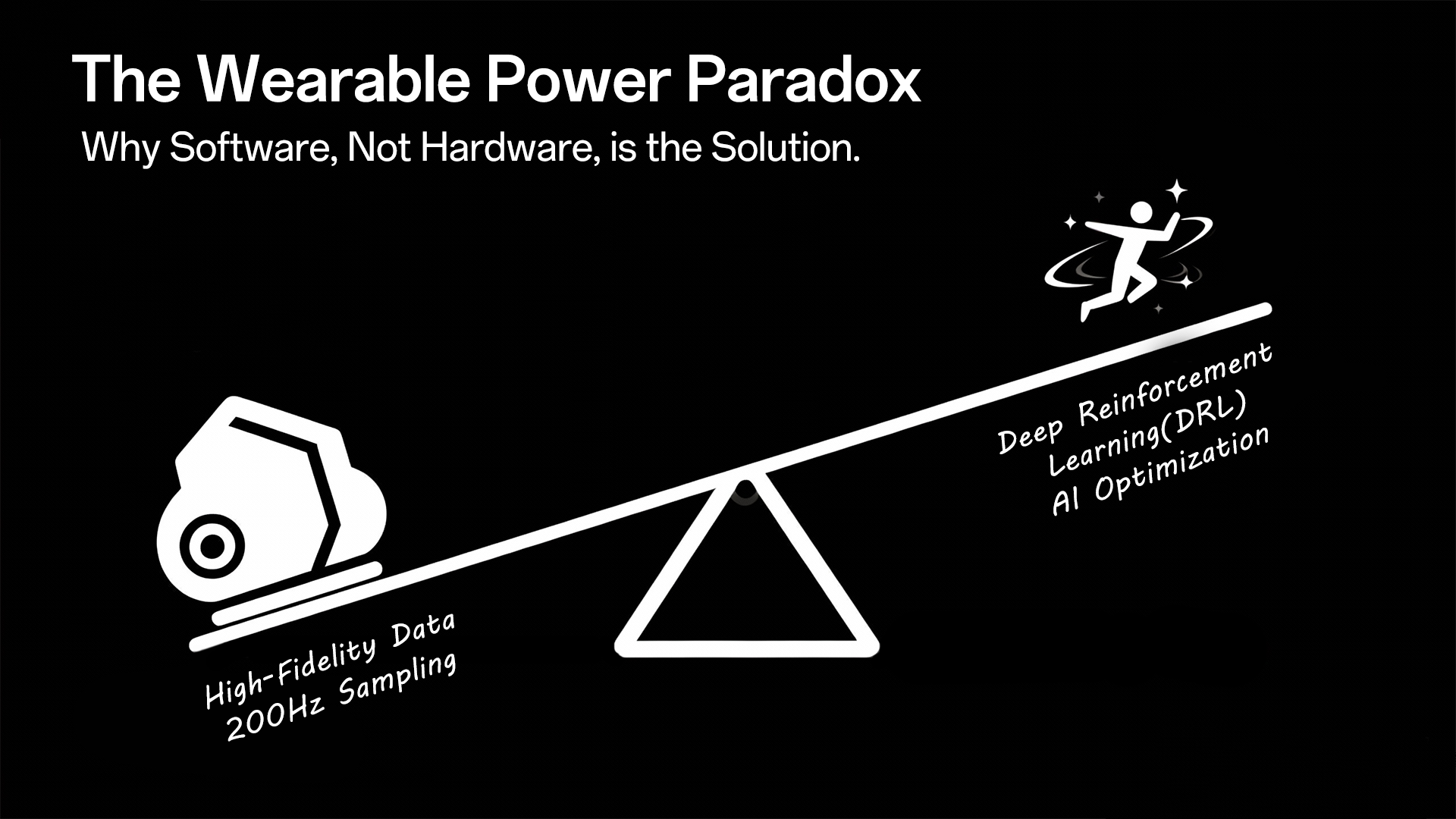

III. The Tyranny of Hardware: When the Display Must Sleep

The journey toward an empathetic, efficient interface encounters a final, formidable adversary: the physical constraints of the device itself. The goal of continuous monitoring—the core capability needed to detect subtle anomalies—is fundamentally challenged by the scarcity of battery power and internal storage.

Energy vs. Information: The Silent Algorithms

For comprehensive remote patient monitoring, high-accuracy AI models like Ensemble LSTM-CNN achieve response times of 2.5 seconds, and the cloud infrastructure (like Azure) can generate notifications within approximately 11 seconds. However, sustaining this responsiveness across a usable battery life often requires disabling key user-facing features.

The display, the very element needed to convey legibility, is a major power drain. In the research prototype for elderly monitoring (HADA), the two-inch LCD screen used for real-time visualization is usually disabled because it is a significant battery consumer. Energy consumption tests confirm the stark trade-off: keeping the display active reduces battery life to approximately 1 hour (at a 1-second measurement interval), whereas employing an energy-saving deep sleep mode can extend operational life to 22 hours. In this context, the display must sleep for the device to survive.
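The reason deep sleep helps so much follows from a simple duty-cycle estimate: battery life is capacity divided by average current, and average current is dominated by whichever state the device spends most of its time in. The sketch below works through that arithmetic. Every number in it (capacity, currents, wake schedule) is a hypothetical placeholder, not a measurement from the HADA prototype, so the outputs are not expected to reproduce the 1-hour and 22-hour figures above.

```python
def average_current_ma(active_ma: float, sleep_ma: float,
                       active_s: float, period_s: float) -> float:
    """Time-weighted average current for a device awake active_s out of every period_s."""
    if not 0 < active_s <= period_s:
        raise ValueError("active_s must be in (0, period_s]")
    duty = active_s / period_s
    return duty * active_ma + (1 - duty) * sleep_ma


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized runtime: ignores battery aging, voltage sag, and conversion losses."""
    return capacity_mah / avg_current_ma


CAPACITY_MAH = 200.0  # hypothetical battery

# Display always on: the device never sleeps (duty cycle of 1).
always_on = average_current_ma(active_ma=200.0, sleep_ma=0.0, active_s=1.0, period_s=1.0)

# Display off, deep sleep between measurements: awake 1 s out of every 10 s.
deep_sleep = average_current_ma(active_ma=60.0, sleep_ma=1.0, active_s=1.0, period_s=10.0)

print(f"always on : {battery_life_hours(CAPACITY_MAH, always_on):.1f} h")
print(f"deep sleep: {battery_life_hours(CAPACITY_MAH, deep_sleep):.1f} h")
```

The model is deliberately crude, but it shows the shape of the trade-off: lengthening the sleep interval or cutting the active current both raise runtime, and the display contributes to the active current every second it is lit.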

The OS Problem: A Threat to Continuous Care

Beyond battery limits, the embedded operating systems (OS) often undermine the critical functions of long-term monitoring apps. The Pulsewatch team identified that the Samsung Tizen OS automatically terminates third-party apps when the battery level drops below 20% to enter power-saving mode.

The catch is that the monitoring application, which is essential for AF detection, does not restart on its own. Until the watch is manually rebooted, the app stays off, leaving significant gaps in the near-continuous data stream.
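Gaps of this kind can at least be measured after the fact from the timestamps of the recorded samples. The sketch below is one simple way to do that, assuming samples arrive as Python `datetime` values; the function names, the 5-minute sampling schedule, and the 10-minute gap threshold are all illustrative assumptions, not details of the Pulsewatch system.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, max_interval):
    """Return (start, end) pairs where consecutive samples are more than max_interval apart."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > max_interval]


def coverage(timestamps, max_interval):
    """Fraction of the recording span that is not lost to gaps."""
    ordered = sorted(timestamps)
    if len(ordered) < 2 or ordered[-1] == ordered[0]:
        return 0.0
    span = ordered[-1] - ordered[0]
    lost = sum((b - a for a, b in find_gaps(ordered, max_interval)), timedelta())
    return 1 - lost / span


# Hypothetical day: samples every 5 minutes for an hour, then the app is
# terminated and only resumes three hours later after a manual reboot.
start = datetime(2024, 1, 1, 8, 0)
minutes = list(range(0, 61, 5)) + list(range(240, 301, 5))
samples = [start + timedelta(minutes=m) for m in minutes]

threshold = timedelta(minutes=10)
for gap_start, gap_end in find_gaps(samples, threshold):
    print(f"gap from {gap_start:%H:%M} to {gap_end:%H:%M}")
print(f"coverage: {coverage(samples, threshold):.0%}")
```

A report like this does not close the gap, but it lets a study team or caregiver see how much of the "continuous" record actually exists before trusting the absence of alerts.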

This hardware tyranny forces design compromises: systems targeting populations with potential cognitive impairment (such as older adults) must be designed for passive AF monitoring, requiring minimal user attention and configuration, such as only asking the user to "hold still" when an abnormality is detected.

Conclusion: From Data to Quieter Confidence

The journey of the smartwatch, from a niche gadget to a critical health monitor, is a profound narrative of technological progress colliding with human frailty. We have learned that if the detection algorithm is flawed, it causes harm; if the interface is illegible, it causes confusion; and if the device is constrained by power, it fails to function when most needed.

We must conclude that if accuracy is science, and legibility is design—then empathy is ethics.

The ultimate goal of wearable health is not to generate more data points, but to foster quieter confidence. Future research must resolve the fundamental conflicts between computational cost and visual comfort, ensuring that systems prioritize personalized analysis and improve precision to reduce the rate of false alerts. Only by ensuring that the technology is reliable enough to earn trust and intuitive enough to be ignored when safe can we transition the smartwatch from a device of anxiety to a true guardian of well-being.
